5 Surface Geophysics

Surface geophysical tools are a class of nonintrusive geophysical instruments used to evaluate the subsurface. They indirectly measure physical properties of materials from signals produced by natural or generated sources and rely on contrasts in the properties of different materials. Surface geophysical tools are generally portable, can cover a large area, and can “see” between wells. The portability of the tools and technological advances in computing power allow surface geophysical methods to be used efficiently in large-scale investigations (for example, area geology) and small-scale site characterizations (for example, identifying a UST at a corner retail store). Surface geophysical tools can also be used as part of remote sensing investigations (see Section 9.16) and can be paired with other tools discussed in this document (see the ASCT Selection Tool, as well as Section 3 and Section 4) as site investigations progress.

Surface geophysical tools are an indirect method. They can be used to image subsurface features that control contaminant transport and, under optimal conditions, sense proxies of contamination, but they cannot see concentrations of specific contaminants. They require correlation or interpretation and can be subject to misinterpretation. For these reasons, surface geophysical tools are most powerful when used in combination with conventional measurements, where they have the potential to reduce characterization and monitoring costs. For example, surface geophysics is often performed to obtain additional data. Additional data collection could involve a drilling program designed to intercept detected anomalies, such as interfaces of interest, voids, faults, or fractures. In addition, excavation activities could be designed to verify the depth of buried debris, tanks, or pipes. In many cases, surface geophysical tools can be used to identify the approximate locations of subsurface utilities so that subsequent invasive phases avoid utility strikes.

5.1 How to Select and Apply Surface Geophysical Tools Using this Document

Projects typically have the following phases: data acquisition, data reduction and processing, modeling, and geological interpretation.

- Data acquisition: The tool sends an impulse along a linear transect or across a 3-D grid. The signal propagates through the subsurface and is picked up by the tool’s receiver. A single transect typically has constant data spacing, with resolution based on the target, and is perpendicular to the target. When multiple transects are combined, a 3-D grid of stations and contoured results can be formed. The signal-to-noise ratio (the ratio of the desired data to unwanted spatial or temporal fluctuations in the measured data) is improved by repeated measurements.

- Data reduction and processing: The raw data from the data acquisition transect (or survey) must be corrected for geometric effects controlling signal transmission and attenuation. This process is referred to as data reduction. It can include correcting data for unwanted variations unrelated to the variation in subsurface properties (for example, a gravity survey is often corrected for surface topography). Digital signal processing, including time series analysis (for example, Fourier analysis), is used to enhance signals relative to noise and geometric effects. Be aware that even if a good signal-to-noise ratio is obtained, target detection is dependent on resolution (see the ASCT Surface Geophysics Tool Summary Table for tool-specific resolution) (Mussett and Khan 2000). Targets are recognized as an “anomaly” in the data (for example, values are above or below the surrounding data averages). However, not all geophysical targets produce spatial anomalies.

- Modeling: Data modeling occurs following data reduction. The intent of the model is to describe the variation in subsurface properties that explains the acquired datasets. The model should only be as complex as the data allow. The modeling process falls into two exercises: (1) forward modeling and (2) inverse modeling. Forward modeling takes a model for the distribution of subsurface properties (like a geologic cross section) and uses a mathematical algorithm to approximate the geophysical response that might be seen with a tool (that is, given some set of variables, what is the result). Inverse modeling starts with observed geophysical data (typically obtained from a tool) and uses them to produce a model of the subsurface physical property distribution (that is, given some measurements, what caused them). A simple example is using the arrival times of seismic P-waves (see Section 5.4) to determine depth to the bedrock interface. A more sophisticated example is demonstrated by the Scenario Evaluator for Electrical Resistivity (SEER) tool discussed in Section 5.2.2, which generates an estimate of the 2-D resistivity distribution from multiple samples of the electrical field induced by injecting electrical current into the Earth.

The model dimensionality depends on the data coverage. It may be a 1-D vertical profile from a sounding around a single point, a 2-D model from a single profile of data, or a 3-D model from a set of parallel transects. Many geophysical models suffer from being non-unique; the models contain more cells (or parameters) than independent measurements acquired. It is then impossible to find a “unique” model without additional information. For this reason, additional assumptions are made to constrain the model.

Models are inevitably simpler than reality as the heterogeneous nature of the subsurface is never fully captured. Models produced from tomographic imaging methods (for example, electrical resistivity imaging or seismic tomography) usually employ a smoothness constraint to generate a minimal structure image from the range of plausible non-unique models. Images are thus a blurry representation of the subsurface, making it difficult to discern the exact shape and size of anomalies, especially when stations are not close enough to reveal all details of the signal (Mussett and Khan 2000).

- Interpretation: Following modeling, the results are interpreted into a geological or site characterization context. For example, a region of higher EC could indicate the presence of higher salinity groundwater. However, interpretations are typically non-unique as many geophysical properties respond to a wide range of physical and chemical variations. The geophysical model must be combined with all available data (including geologic, geophysical, outcrops, and chemical data) to update the site conceptual model (Mussett and Khan 2000).
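The difference between forward and inverse modeling can be illustrated with the simple seismic example mentioned above. The following is a minimal sketch, assuming a flat two-layer Earth (overburden velocity `v1` over faster bedrock velocity `v2`) and noise-free first-arrival picks; the function names, the example velocities, and the manually picked `crossover_m` are hypothetical choices for illustration, not part of any tool referenced in this document.

```python
import numpy as np

def forward_first_arrivals(offsets_m, v1, v2, depth_m):
    """Forward model: predict first-arrival times (s) for a two-layer Earth."""
    offsets = np.asarray(offsets_m, dtype=float)
    t_direct = offsets / v1
    # Intercept time of the wave refracted along the bedrock interface.
    t_intercept = 2.0 * depth_m * np.sqrt(v2**2 - v1**2) / (v1 * v2)
    t_refracted = offsets / v2 + t_intercept
    return np.minimum(t_direct, t_refracted)

def invert_two_layer(offsets_m, times_s, crossover_m):
    """Inverse model: estimate v1, v2, and depth from first-arrival times.

    crossover_m is an analyst-picked offset separating direct arrivals
    (nearer) from refracted arrivals (farther); it is an assumption here.
    """
    offsets = np.asarray(offsets_m, dtype=float)
    times = np.asarray(times_s, dtype=float)
    near = offsets < crossover_m
    far = ~near
    # Direct wave: straight line through the origin, slope = 1 / v1.
    slope1 = np.sum(offsets[near] * times[near]) / np.sum(offsets[near] ** 2)
    # Refracted wave: straight line with slope = 1 / v2 and an intercept time.
    slope2, intercept = np.polyfit(offsets[far], times[far], 1)
    v1, v2 = 1.0 / slope1, 1.0 / slope2
    depth = intercept * v1 * v2 / (2.0 * np.sqrt(v2**2 - v1**2))
    return v1, v2, depth

# Hypothetical survey: geophones every 2 m, 10 m of overburden over bedrock.
offsets = np.arange(2.0, 102.0, 2.0)
times = forward_first_arrivals(offsets, v1=500.0, v2=3000.0, depth_m=10.0)
print(invert_two_layer(offsets, times, crossover_m=23.0))
```

With real data the picks contain noise and the layering is rarely this simple, so the recovered depth is an estimate whose reliability depends on the assumptions built into the model.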

Answering the following targeted questions about site characteristics is the first step in identifying which surface geophysical tool, or series of tools, can be used to meet the project goals:

- What is the objective of the project? For example, is the focus on determining geology? Is the focus on locating the water table? Is the focus on identifying a metallic object such as a utility or buried drum?

- What is the size of the site? For example, is it a large expansive site so that extensive transects can be installed? Is the property a small corner retail store?

- What is the resolution needed? For example, is large-scale resolution sufficient or is a finer-scale needed?

- What is the depth of the feature? For example, is it shallow or is the feature expected to be deep?

- What do the unconsolidated and consolidated materials consist of? For example, is it dry, sandy soil? Does it contain thin layers?

- Are there cultural features (such as roads, heavy equipment, power lines, electrical boxes) that could interfere with the results and result in poor data quality?

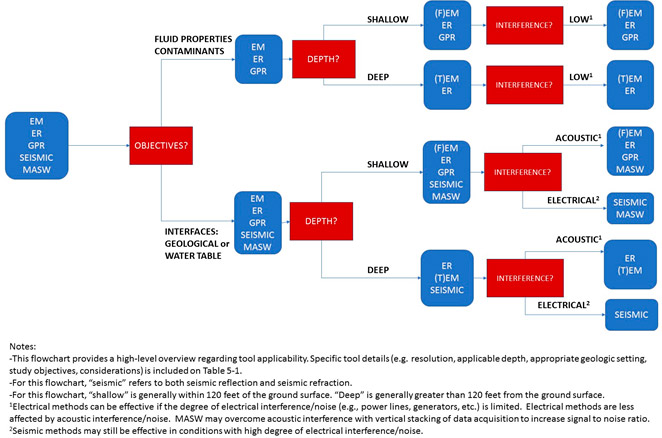

Figure 5‑1 provides a high-level decision flow chart for selecting and applying the surface geophysical tools discussed in this section. The Geophysical Decision Support System (Harte and Mack 1992) can provide additional decision-making support.

The ASCT Surface Geophysics Tool Summary Table provides a screening process to determine which tools to use based on site and project parameters and project objectives. The USGS Fractured Rock Geophysical Toolbox Method Selection Tool (USGS 2018b) can also be used to select appropriate tools. More than one tool may provide the data required. Using different tools to collect similar data provides supporting evidence and improves data confidence for site characterization. ASCT Surface Geophysics Checklists (.xlsx version) are also provided to support tool selection and use and project management.

Figure 5‑1. Flow chart summarizing major decision factors when selecting surface geophysical tools.

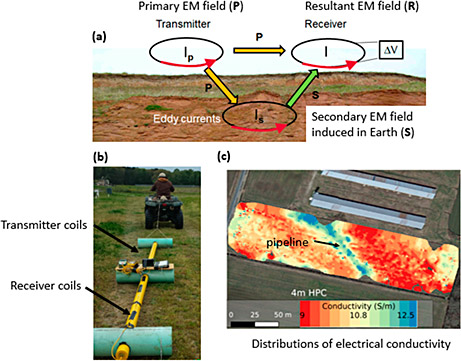

Tools discussed in this section include ERI, ground penetrating radar (GPR), seismic reflection and refraction, multichannel analysis of surface waves (MASW), and both time domain and frequency domain EM surveys.

5.2 Electrical Resistivity Imaging

The ERI method, also known as electrical resistivity tomography (ERT), is used to noninvasively determine the spatial variations (both laterally and with depth) of the electrical resistivity of the subsurface [see (Binley 2015) for a recent review]. Electrical resistivity describes the intrinsic resistance of the subsurface to the transport of electrical charge via conduction mechanisms. The reciprocal of resistivity gives the EC of the subsurface. Electrical resistivity is a valuable geophysical property to measure because it varies over many orders of magnitude and depends on several physical and chemical properties of interest in high-resolution site characterization.

The electrical resistivity of the subsurface is a function of lithology (porosity, surface area), pore-fluid characteristics (for example, saline water, fresh water, NAPL), water content, and temperature. Ion-rich pore fluids, formations with high interconnected porosity, and formations with a high surface area (for example, fine-grained formations, especially clays) increase the EC of the subsurface, which decreases resistivity. Unsaturated soils increase resistivity (air is effectively a perfect insulator), as does the presence of air-filled voids and/or free-phase gases. The presence of metallic features (for example, ore minerals, infrastructure) also increases EC.

The sensitivity of ERI to multiple subsurface physical and chemical properties makes ERI a highly versatile surface geophysical method. It can be used to image the hydrogeological framework controlling groundwater transport, the distribution of inorganic contaminants in groundwater, and variations of moisture content in the subsurface. This versatility also means that ERI results in nonunique interpretations of subsurface properties, as multiple unknown factors influence subsurface resistivity.

Resistivity imaging has many advantages relative to invasive methods of exploring the subsurface and other geophysical technologies. The primary advantages of the technology result from the (1) wide range of resistivity encountered in geological media, and (2) strong dependence of resistivity on multiple subsurface physical and chemical properties (including moisture content, porosity, fluid salinity, and grain-size distribution). Consequently, ERI has a wide range of potential applications.

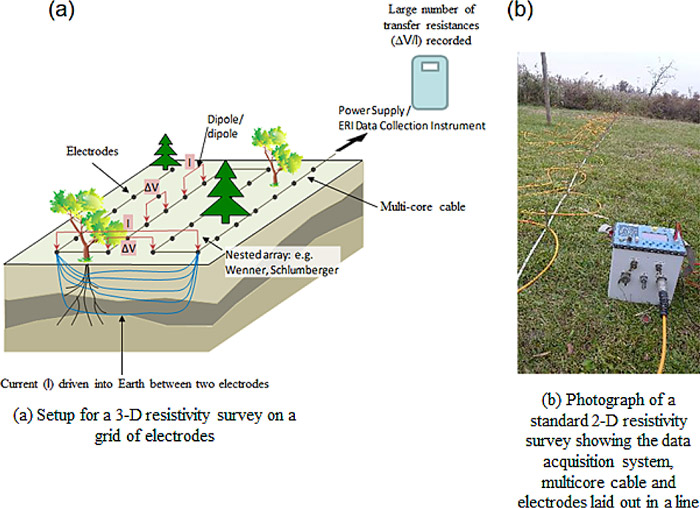

5.2.1 Use

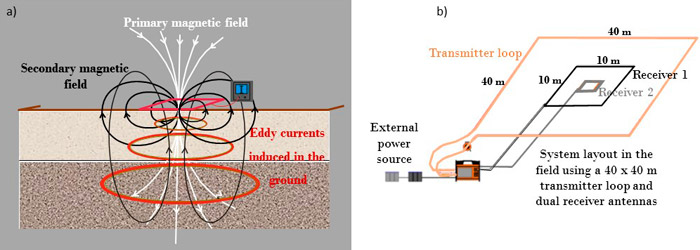

Electrical resistivity relies on galvanic (direct) contact between the transmitting instrument and the Earth, which differentiates it from the EM methods described elsewhere in this section. The basic measurement is obtained using four electrodes placed on the surface of the Earth or in boreholes for more specialized cross-hole applications. Two current electrodes are used to drive electrical current (I) into the subsurface, and a second pair of potential electrodes records the resulting electrical potential difference between them. The source of electrical current is a transmitter (typically a few hundred watts capacity for site investigation applications), and voltage differences are recorded using a receiver that is synchronized with the transmitter. An overview of the survey is given in Figure 5‑2.

A transfer resistance (the measured voltage difference divided by the injected current) is calculated for each measurement and is often converted to an apparent resistivity. The apparent resistivity represents the equivalent resistivity of a homogeneous Earth that would result in the measured transfer resistance. These data are representations of the raw measurements. Inverse methods are used to generate images of the variations in the actual resistivity structure (for a heterogeneous Earth) beneath the electrodes.
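The conversion from transfer resistance to apparent resistivity can be sketched as follows. This is a minimal illustration, assuming four point electrodes in a straight line on the flat surface of a homogeneous half-space; the function names and example values are hypothetical. The geometric factor uses the standard half-space expression based on the distances between the current electrodes (A, B) and the potential electrodes (M, N).

```python
import math

def geometric_factor(a, b, m, n):
    """Geometric factor K (m) for collinear surface electrodes.

    a, b: positions (m) of the current electrodes along the line.
    m, n: positions (m) of the potential electrodes along the line.
    The sign of K depends on the electrode ordering convention.
    """
    am, bm = abs(a - m), abs(b - m)
    an, bn = abs(a - n), abs(b - n)
    return 2.0 * math.pi / (1.0 / am - 1.0 / bm - 1.0 / an + 1.0 / bn)

def apparent_resistivity(delta_v, current, k):
    """Apparent resistivity (ohm-m) from voltage (V), current (A), and K (m)."""
    transfer_resistance = delta_v / current  # ohms
    return k * transfer_resistance

# Wenner array with 1 m spacing: A at 0 m, M at 1 m, N at 2 m, B at 3 m.
k_wenner = geometric_factor(a=0.0, b=3.0, m=1.0, n=2.0)
print(k_wenner, apparent_resistivity(delta_v=0.5, current=0.1, k=k_wenner))
```

For the Wenner geometry shown, the geometric factor reduces to 2π times the electrode spacing. Topography, buried electrodes, or borehole electrodes require different geometric factors, which is one reason field data are normally processed with dedicated software.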

The electrodes are usually rods or spikes driven into the ground at regular intervals. In some cases, it is necessary to increase the size of the electrodes to reduce the contact resistance (more technically, impedance) between the metal and porous ground. High-contact resistances reduce the current flowing in the subsurface and, consequently, the resulting voltage differences at the receiving potential electrode pairs (leading to lower signal-to-noise ratio). Large electrodes can help improve the signal-to-noise ratio, but their use may violate basic assumptions when interpreting data.

Figure 5‑2. Basic components of a resistivity imaging survey.

Source: Rutgers University Newark, Lee Slater, Used with permission

Electrode configurations can include:

- nested arrays, such as the Wenner array shown in Figure 5‑2, which are popular for sensing horizontal interfaces in the Earth.

- the dipole-dipole array, where the current injection and potential recording electrode pairs are separated by some integer multiple of the electrode spacing. These arrays are popular for sensing vertical contacts in the Earth. The different strengths of each configuration result from the different sensitivity patterns that arise based on the relative locations of the four electrodes.

The most common application of ERI is in the form of a 2-D transect, where the objective is to obtain a 2-D cross section of the resistivity structure of the Earth. Large transects are typically built up using a set number of electrodes and associated cabling that is progressively rolled along to cover extensive terrain. A major limitation of the 2-D transect is that the imaging (see below) results in a model of the resistivity structure that is constant in the plane perpendicular to the image. Such a 2-D earth assumption may be reasonable in some cases (for example, a resistivity survey perpendicular to a buried pipeline), but in most cases the subsurface is inherently 3-D. A 3-D resistivity survey requires electrodes to be placed on a grid, rather than a survey line. Commercially available software packages for processing resistivity imaging datasets now fully support 3-D surveys.

5.2.2 Data Collection Design

Certain rules of thumb for survey design can guide implementation of the resistivity method. There is an inherent tradeoff between resolution and imaging depth (or imaging distance away from a borehole). Generally, the best possible image resolution is approximately equal to the electrode spacing. This optimal resolution only exists in the shallow subsurface below the electrodes because resolution decreases greatly with distance from the sensing electrodes (with depth below a surface array). The maximum investigation depth depends on the total length of a 2-D ERI survey. If the sequence of four-electrode measurements to acquire is well designed and includes four-electrode combinations with large electrode spacings, the maximum imaging depth can be roughly estimated as some fraction (for example, 0.75) of the total survey length. This estimation typically assumes a homogeneous Earth and underscores the need for caution when making assumptions dependent on unknown resistivity distributions.
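The rules of thumb above amount to simple arithmetic that can be scripted when comparing candidate layouts. The sketch below is illustrative only: the function name and the example layout are hypothetical, and the depth fraction is deliberately a required input because the appropriate value depends on the array type, the measurement sequence, and the (unknown) resistivity structure.

```python
def survey_rules_of_thumb(n_electrodes, spacing_m, depth_fraction):
    """Rough screening numbers for a 2-D surface ERI line.

    depth_fraction: assumed ratio of maximum imaging depth to survey length.
    """
    survey_length_m = (n_electrodes - 1) * spacing_m
    return {
        "survey_length_m": survey_length_m,
        # Best-case resolution, valid only in the shallow subsurface.
        "best_resolution_m": spacing_m,
        "max_imaging_depth_m": depth_fraction * survey_length_m,
    }

# Hypothetical layout: 48 electrodes at 2 m spacing, using the example
# fraction quoted in the text.
print(survey_rules_of_thumb(n_electrodes=48, spacing_m=2.0, depth_fraction=0.75))
```

These numbers are a starting point for design only; the synthetic modeling workflow described in this section gives a more defensible assessment of resolution and investigation depth.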

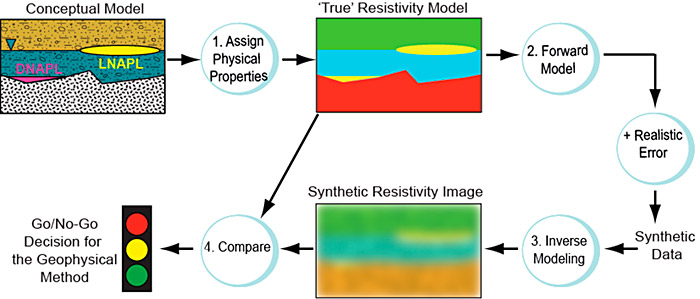

Developing a resistivity conceptual model provides a better way to assess image resolution and investigation depth. First, a synthetic resistivity distribution is developed based on the CSM (see Figure 5‑3). All available information on the subsurface geology and target of interest is used to develop the model. Educated guesses are usually required when assigning probable resistivity values for targets of interest and geological units. These guesses are constrained by data from available borehole logs, groundwater geochemical data, and tabulated values of typical resistivities for different geological materials. A forward model calculation generates a synthetic resistivity dataset, which is contaminated with noise representative of the site so that it simulates the data acquired from a true field survey. The synthetic dataset is then inverted (see Figure 5‑3) to assess which survey design provides the most information to meet the project objectives. A geophysical contracting company experienced in resistivity imaging can perform these simulations, and they should be part of a competitive bid for a contract. The Scenario Evaluator for Electrical Resistivity (SEER) is a technology-transfer tool developed by the USGS in collaboration with Rutgers University Newark (Terry et al. 2017) that can be helpful when evaluating the likely effectiveness of a resistivity survey for a specific application. SEER is packaged as a Microsoft Excel spreadsheet that can be downloaded from the USGS Scenario Evaluator for Electrical Resistivity (SEER) Survey Pre-Modeling Tool webpage (USGS 2018c).

Figure 5‑3. Workflow to evaluate the likely effectiveness of a resistivity survey for a specific application.

Source: Rutgers University Newark, Lee Slater, Used with permission

In the example shown in Figure 5‑3, the conceptual model is for unconsolidated sediments overlying bedrock, with hypothetical plumes of DNAPL and LNAPL. The workflow tests the likely resolution of the subsurface based on assigned electrical properties for the site geology and contaminants. Based on the assumed electrical properties, the water table in the unconsolidated sediments and the bedrock interface are expected to be well resolved. LNAPL may be detected, but the deeper DNAPL is not resolvable. The example highlights the inherently low resolution of resistivity imaging (Day-Lewis et al. 2017).

5.2.3 Data Processing and Data Visualization

Inverse methods are used to process field resistivity datasets to compute a subsurface resistivity distribution that is consistent with the acquired measurements. Typically, many more parameters than measurements are included in the subsurface resistivity model. As a result, inverse models generate nonunique solutions to the resistivity structure. Additional constraints on the model structure are included to favor certain models or images over others and so narrow the range of plausible resistivity structures. By far the most common constraint is called smooth inversion or smoothness-constrained inversion because it produces models with smooth variations in physical properties (deGroot-Hedlin and Constable 1990).
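The effect of a smoothness constraint on an underdetermined problem can be seen in a toy example. The sketch below is not an ERI inversion: it assumes a hypothetical linear problem in which each of four measurements is a simple average of neighboring cells in a ten-cell model, and it solves a regularized least-squares problem that penalizes differences between adjacent cells. Real resistivity inversion is nonlinear and iterative, so this only illustrates how the constraint selects one smooth model from many that fit the data.

```python
import numpy as np

def smooth_inversion(G, d, alpha):
    """Minimize ||G m - d||^2 + alpha * ||D m||^2 for model m.

    G: (n_data, n_cells) linear forward operator (an assumption of this toy).
    d: (n_data,) observed data.
    alpha: weight on the roughness penalty (larger gives smoother models).
    """
    n_cells = G.shape[1]
    # First-difference operator: (D m)[i] = m[i + 1] - m[i].
    D = np.diff(np.eye(n_cells), axis=0)
    lhs = G.T @ G + alpha * (D.T @ D)
    return np.linalg.solve(lhs, G.T @ d)

# Hypothetical setup: 10 cells, 4 measurements, each averaging 4 adjacent cells.
n_cells = 10
G = np.zeros((4, n_cells))
for row, start in enumerate([0, 2, 4, 6]):
    G[row, start:start + 4] = 0.25

m_true = np.array([100.0] * 5 + [20.0] * 5)  # sharp step in the "true" model
d = G @ m_true

m_smooth = smooth_inversion(G, d, alpha=1e-6)
m_smoother = smooth_inversion(G, d, alpha=10.0)
print(np.round(m_smooth, 1))
print(np.round(m_smoother, 1))
```

With a small penalty weight the estimate reproduces the data closely, yet it is not expected to reproduce the sharp step because many models fit four measurements equally well. Increasing the weight trades data fit for smoothness, which is why the choice of constraint and its weighting should be reported with any inverted image.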

Inverse methods involve an iterative approach in which a mathematical model is used to minimize the difference between the field measurements and the simulated values based on a numerical forward model for a synthetic structure of the Earth. Finite difference or finite element models are used in the numerical forward modeling. Iterative parameter updates continue until these differences are less than a specified value defining a convergence criterion. At this point, the estimated model is considered a plausible representation of the resistivity structure of the subsurface. Because inversion software can easily be misused by an inexperienced user, the processing should be done by an experienced practitioner. To ensure the representation is plausible, experts use a variety of methods to assess the quality of the input data and the information content of the inverted image and to identify red flags. Unfortunately, geophysical contractors may not always use image appraisal tools to assess the realistic information content in the inverted images. Contractors should provide evidence of image appraisal as part of the delivery of ERI results.

ERI data interpretation is best done as part of a multitechnology approach to refining site conceptual models. Interpretations of ERI survey images should be constrained by other information available at the site. Interpretations of resistivity images presented by a contractor should never be accepted without careful consideration of whether the interpretation is consistent with existing site information. Used appropriately, the spatially rich information provided by an ERI survey significantly helps CSM refinement.

5.2.4 Quality Control

Numerous QC checks should be performed during the acquisition of field resistivity datasets. The repeatability of the recorded voltages in response to an injected current should be assessed by repeating individual measurements multiple times. A much more robust quantification of the error in the field measurement is achieved from a ‘reciprocal’ measurement, whereby the electrode pair used for current injection and the electrode pair used for voltage measurement are switched. The principle of reciprocity states that the normal and reciprocal measurements must be identical. Differences between the normal and reciprocal measurements provide a rigorous estimate of the measurement error (Slater et al. 2000). These errors depend on multiple factors, some of which can be addressed during the field survey. One factor is the contact resistance between the electrodes and the Earth. This resistance limits the flow of current into the ground and consequently limits the magnitude of the recorded voltages. High contact resistances result from resistive ground, poorly placed and inserted electrodes, and bad contacts between the electrode and the resistivity instrument. Most resistivity instruments allow the user to assess contact resistances and correct issues prior to the start of a survey. Naturally high contact resistances resulting from the ground conditions can be reduced by watering the electrodes.
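A reciprocal error check can be scripted in a few lines once normal and reciprocal transfer resistances have been paired. The following is a minimal sketch; the function name, the example values, and the 5% screening threshold are hypothetical and should be replaced with project-specific criteria.

```python
import numpy as np

def reciprocal_error(r_normal, r_reciprocal):
    """Compare paired normal and reciprocal transfer resistances (ohms).

    Returns the mean resistance, the absolute reciprocal error, and the
    reciprocal error as a percentage of the mean.
    """
    rn = np.asarray(r_normal, dtype=float)
    rr = np.asarray(r_reciprocal, dtype=float)
    mean = 0.5 * (rn + rr)
    abs_err = np.abs(rn - rr)
    pct_err = 100.0 * abs_err / np.abs(mean)
    return mean, abs_err, pct_err

# Hypothetical paired measurements; the second pair disagrees badly.
mean, abs_err, pct_err = reciprocal_error([10.0, 2.0], [10.2, 1.0])
keep = pct_err < 5.0  # assumed screening threshold, not a standard value
print(pct_err, keep)
```

Measurements that fail the screen are candidates for removal before inversion, and the errors of the retained measurements can be used to weight the data during processing.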

Poor data quality also results from buried infrastructure, particularly electrical utilities. The site should be carefully evaluated for the presence of buried utilities, particularly at brownfield sites or within industrial complexes. Identifying the location of utilities helps to isolate anomalous structures in the resistivity images caused by such infrastructure.

Accurate recording of the electrode locations is another important QC consideration. Electrodes are typically put out with the intention of uniform spacing, which is sometimes prohibited by site conditions. When site conditions dictate that the electrodes be offset from the nominal spacing, exact electrode locations should be recorded. Incorrectly documented electrode locations result in model errors during inversion that manifest as artificial image structure at shallow depths close to the electrodes. The topography also should be recorded when the land surface deviates significantly from horizontal. Slopes greater than 10% should be documented and resistivity data modeling should account for the steeper topography. All modern ERI software has the capability to account for topography during inverse modeling.

QC should also be considered during data processing. A correct estimate of the errors in the measurements is necessary to accurately weight the relative importance of the different measurements that constrain the resistivity structure. Unlike errors determined from repeatability tests, reciprocal measurement errors can identify systematic errors that are not apparent from stacking.

As previously noted, image appraisal is important in assessing the significance of the estimate of the resistivity structure; a measure of the depth of investigation to identify well-resolved regions of the subsurface is important. An additional simple but often overlooked QC consideration during the data processing stage is the measure of misfit between the measured field data and the synthetic data determined using the estimated model for the resistivity structure. If this misfit criterion is not adequately minimized, the resulting image should not be considered the best possible estimate of subsurface resistivity.
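One common way to express the misfit is an error-weighted root-mean-square value, in which each residual is divided by the estimated measurement error before averaging. The sketch below assumes this particular definition (other software may report a different statistic) and uses hypothetical values; under this definition, a value near 1 indicates that the model fits the data to about the level of the estimated errors.

```python
import numpy as np

def weighted_rms_misfit(d_obs, d_pred, sigma):
    """Error-weighted RMS misfit between observed and predicted data.

    d_obs:  measured field data.
    d_pred: synthetic data computed from the estimated model.
    sigma:  estimated measurement errors (same units as the data).
    """
    d_obs = np.asarray(d_obs, dtype=float)
    d_pred = np.asarray(d_pred, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    weighted_residuals = (d_obs - d_pred) / sigma
    return float(np.sqrt(np.mean(weighted_residuals ** 2)))

# Hypothetical apparent resistivities (ohm-m) and error estimates.
print(weighted_rms_misfit([100.0, 50.0, 25.0], [102.0, 49.0, 25.5], [2.0, 1.0, 0.5]))
```

A value much greater than 1 suggests the model does not explain the data, while a value much less than 1 suggests the data may have been overfit, which tends to introduce artificial structure into the image.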

5.2.5 Limitations

Despite the wide range of ERI applications, some practitioners make overly bold claims that resistivity is a direct NAPL detection tool. Although it is reasonable to assume that a new spill of electrically resistive NAPL forming a continuous, sufficiently thick layer may be detectable, ERI is highly unlikely to directly detect an aged NAPL spill in the subsurface. More accurately, biodegradation of the NAPL results in resistivity signatures (Atekwana and Atekwana 2010). One example signature is the increase in ionic concentration of the pore fluid that results from mineral surface weathering in the presence of organic acids generated by microbial degradation of LNAPL. Finally, resistivity can appear to correlate with LNAPL and DNAPL distribution because of mutual dependence on low-permeability zones. The low-permeability materials that tend to lock up NAPL (and act as long-term sources of contamination driven by back diffusion) are typically fine-grained materials that are electrically conductive and easily resolvable in electrical images.

The relatively low resolution of ERI (see Figure 5‑3) is a major limitation that tends to be overlooked; structure smoothing is necessary to obtain a meaningful image. Although ERI can often resolve some degree of subsurface heterogeneity, heterogeneity at a scale below the resolution of the imaging may go undetected or exert strong influence on flow and transport not incorporated into the CSM. It is important to recognize that resolution decreases dramatically with distance below the surface (when electrodes are at the surface) so the best possible resolution only applies to the first layer of the Earth with a thickness equal to approximately half the electrode spacing. Claims of high resolution (a few meters) or better at depth (beyond the top 5 m to 10 m) are suspect because they are inconsistent with the physics of the method. In addition, images that show a lot of structural variability at large distances from the electrodes are suspect because resolution with this method decays substantially with depth. The structural variability results from the inversion of noisy datasets and inappropriate constraints placed on the model structure. Similarly, images containing strong lateral contrasts at depth (vertical contacts in the images at depth) are most likely an artifact resulting from modifying the inversion settings to favor rough-image structure.

Another common error in the interpretation of resistivity images from a standard 2-D survey stems from the fact that the inversion routine solves for a 2-D subsurface structure; no variation in resistivity is assumed in the direction perpendicular to the image. Although this is a reasonable assumption in some cases (for example, transects perpendicular to a pipeline or across a valley filled with sediments deposited in a low-energy environment), 2-D imaging is often done in complex 3-D terrain (for example, over karst) where the 2-D assumption is not valid. In this case, the image produced cannot be expected to represent reality and will contain image artifacts because 3-D structures are being represented by a 2-D model.

5.2.6 Cost

The cost of an ERI survey depends on the complexity of the survey and site conditions. A standard 2-D resistivity imaging survey can be performed by two people in the field. A two-person crew may be able to advance over 300 electrode locations in a day under good site conditions. Productivity declines considerably in more complex terrain. A resistivity survey is expected to cost $2,000 to $4,000 per day for field data acquisition. Data processing costs depend on the complexity of the survey; a reasonable assumption is half a day of data processing time for every day of field data acquisition.
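For early budgeting, the figures above can be combined into a rough estimate. The sketch below is only a screening calculation: the function name is hypothetical, the default productivity is the good-site-conditions figure quoted above, and actual productivity and day rates should come from contractor bids.

```python
import math

def eri_survey_estimate(n_electrode_locations, locations_per_day=300,
                        day_rate_usd=(2000, 4000), processing_ratio=0.5):
    """Rough field effort and cost range for a 2-D ERI survey.

    locations_per_day: assumed crew productivity (lower in complex terrain).
    day_rate_usd: (low, high) daily field acquisition cost.
    processing_ratio: processing days per field day.
    """
    field_days = math.ceil(n_electrode_locations / locations_per_day)
    low, high = day_rate_usd
    return {
        "field_days": field_days,
        "field_cost_usd": (field_days * low, field_days * high),
        "processing_days": field_days * processing_ratio,
    }

# Hypothetical survey with 900 electrode locations.
print(eri_survey_estimate(900))
```

Because the document gives no daily rate for processing, the sketch reports processing effort in days rather than dollars.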

5.2.7 Case Studies

Examples of how ERI can be applied are provided in the following case studies:

- ERI Provides Data to Improve Groundwater Flow and Contaminant Transport Models at Hanford 300 Facility in Washington

- Surface and Borehole Geophysical Technologies Provide Data to Pinpoint and Characterize Karst Features at a Former Retail Petroleum Facility in Kentucky

5.3 Ground Penetrating Radar

GPR is a high-resolution EM technique that has been developed over the past 40+ years to investigate the subsurface in environmental, engineering, and archaeological investigations (Daniels 2000). GPR is a widely accepted imaging and characterization tool, but its effectiveness depends strongly on site conditions. Similar to navigational radar, GPR identifies the location, shape, and size of subsurface features based on the reflection of high-frequency [10 to 1,500 megahertz (MHz)] EM pulses or waves transmitted from a radar antenna (Daniels 2000); (USEPA 2016d).

Due to the wide range of antenna frequencies that can be used, GPR is capable of a high degree of lateral and vertical resolution that often exceeds that of other surface geophysical methods (ASTM 2011a). Large areas can be covered quickly in the field using GPR. When applied in the right setting, GPR is highly efficient and a reliable source of high-quality 2-D and 3-D data. The real-time capability of GPR allows a geophysicist to interpret the results qualitatively to evaluate site conditions and adjust the field program as needed (NJDEP 2005).

GPR detects changes in EM properties in the subsurface, such as dielectric permittivity, conductivity, and magnetic permeability, that are a function of soil and rock properties, water content, and bulk density (ASTM 2011b). Dielectric permittivity (also known as dielectric constant) is a measure of the ability of a material to store an electric charge by separating opposite polarity charges in space; it effectively measures a material’s ability to be polarized by electric displacement due to an electric field and has units of farads per meter (F/m) (ASTM 2011b). It is more convenient in practice to use relative dielectric permittivity, which is the ratio of the dielectric permittivity of a material to the permittivity of a vacuum and is a dimensionless term (Annan 2003). The relative dielectric permittivity of geological materials varies from 1 for air to a maximum of around 80 (depending on the temperature) for water. Solid minerals or rocks have a low relative dielectric permittivity (less than 10). The relative dielectric permittivity of sediments and rocks varies widely depending on the porosity and the water content. Low-porosity rocks and dry unconsolidated sediments have low relative dielectric permittivity, whereas porous rocks and saturated sediments have high relative dielectric permittivity (Everett 2013); (USEPA 2018b). Most geophysics textbooks include tables of representative values of relative dielectric permittivity for different geological materials; actual values strongly depend on porosity and water content.

GPR senses variations in the relative dielectric permittivity, which controls the velocity at which EM waves travel between a transmitter and receiver. The arrival time of the transmitted wave recorded at the receiver is usually stated in nanoseconds (ns; 10⁻⁹ s) (Daniels 2000); (USEPA 2016d). EM waves travel at 0.3 m/ns (speed of light) through the air and less than 0.3 m/ns through geologic media (Daniels 2000); (USEPA 2016d). Variations in the subsurface result in reflected EM waves that are returned to the instrument from interfaces or irregularities with relative dielectric permittivity contrasts. Abrupt variations in water content and porosity are usually the primary cause of reflections returned to the instrument. The returning portion of the EM wave is captured by the receiving antenna, where its amplitude, wavelength, and two-way travel time are recorded for processing and interpretation (Dojack 2012). Short EM wavelengths (high frequency) yield a high resolution of interfaces and objects (Daniels 2000).
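The relationship between relative dielectric permittivity, wave velocity, two-way travel time, and wavelength can be sketched with a few lines of arithmetic. This is a minimal illustration, assuming a low-loss, nonmagnetic material (so that velocity is the speed of light divided by the square root of the relative dielectric permittivity), a uniform velocity above the reflector, and negligible separation between the transmitting and receiving antennas; the function names and example values are hypothetical.

```python
import math

C_M_PER_NS = 0.3  # approximate speed of light in air, as quoted in the text

def gpr_velocity(rel_permittivity):
    """EM wave velocity (m/ns) in a low-loss, nonmagnetic material."""
    return C_M_PER_NS / math.sqrt(rel_permittivity)

def reflector_depth(two_way_time_ns, rel_permittivity):
    """Reflector depth (m) from two-way travel time (ns)."""
    return gpr_velocity(rel_permittivity) * two_way_time_ns / 2.0

def wavelength(freq_mhz, rel_permittivity):
    """Wavelength (m) in the material for a given antenna frequency (MHz)."""
    cycles_per_ns = freq_mhz * 1e-3  # 1 MHz = 0.001 cycles per nanosecond
    return gpr_velocity(rel_permittivity) / cycles_per_ns

# Hypothetical example: a material with relative permittivity 9,
# a reflection arriving at 40 ns, and a 100-MHz antenna.
print(gpr_velocity(9.0), reflector_depth(40.0, 9.0), wavelength(100.0, 9.0))
```

Because velocity falls as water content rises, the same two-way travel time corresponds to a shallower reflector in saturated material than in dry material, which is why a site-specific velocity estimate is needed to convert GPR records to depth.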

GPR is most often a shallow subsurface tool (for example, 100 ft or less) due to the many variables that affect EM penetration, reflection, and scattering. GPR service providers should be equipped with multiple antennas of different frequencies to adjust for site-specific conditions, if needed. As an example of the relationships between antenna frequency, resolution, and penetration depth, a 100-MHz antenna can penetrate up to 60 ft in a clean, dry sand setting and have a resolution capable of identifying a 2-ft pipe at 20 ft. In comparison, a 1,000-MHz antenna can penetrate to only 6 ft in a clean, dry sand but resolve shallow wire mesh and a 3/16-inch hose at 6 ft (see US Radar Inc – Ground Penetrating Radar FAQ website (USRadar 2019)). An inherent tradeoff between penetration depth and resolution must be evaluated critically and is discussed in greater detail in Section 5.3.4.

5.3.1 Use

GPR equipment typically consists of a radar-control unit, transmitting and receiving antennas, electronics, and recording devices (see Figure 5‑4). The transmitting and receiving electronics are kept separate (Daniels 2000). The radar-control unit sends synchronized trigger pulses to the transmitting and receiving antennas at up to thousands of times per second (USEPA 2018b). The antennas are typically shielded to maximize the energy directed to and from the subsurface target, to reduce the energy transferred directly between the transmitter and receiver as well as into the air, and to minimize external noise from interferences (Annan 2009). The system is digitally controlled, and data are digitally recorded for processing and display (Daniels 2000). The GPR system contains a microprocessor, internal memory, and data storage.

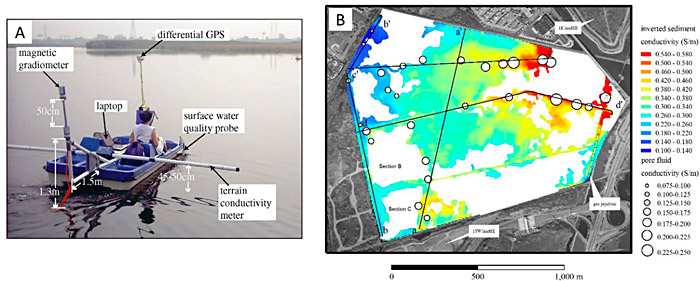

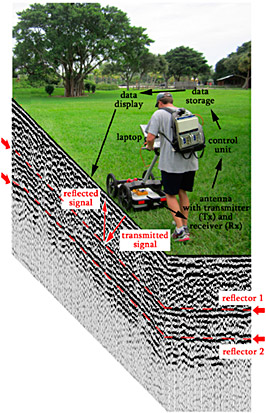

Figure 5‑4. GPR implementation involves a pulse of EM energy traveling from a transmitter (Tx) to a receiver (Rx) antenna; contrasts in dielectric permittivity return a reflection in the GPR record.

Source: Florida Atlantic University, Used with permission

Reflection profiling is the most common type of GPR deployment. Radar waves are transmitted, received, and recorded each time both antennas have been moved a fixed distance across the ground surface (USEPA 2018b). GPR is often deployed along linear transects. The number of transects is a function of the anticipated propagation of the EM wave in the geologic environment. The transects are completed in one direction and then an additional set is completed in a perpendicular direction to improve resolution since the reflected EM pulses are only acquired in the narrow band directly below the transect and could miss objects aligned parallel to the transects. Compiling responses along each transect line enables a clearer interpretation of the subsurface along a profile.

GPR applications in geologic studies include identifying the depth to bedrock, depth and thickness of soil strata, depth to the water table, and location of cavities or fractures in bedrock (USEPA 2016d); (USEPA 2018b). GPR applications also include locating subsurface structures such as pipes, drums, waste and landfills, tanks, cables, trenches, pits, fill material, unexploded ordnance (UXO), and munitions. GPR may be used for QC testing of soil surveys or on road paving and asphalt projects (Annan 2009). In addition, GPR may be able to detect subsurface contamination provided the contaminant changes the dielectric permittivity to a detectable degree (for example, above background noise in the data). For example, the relative dielectric permittivity of a common organic solvent, trichloroethene, is 3.42 compared to 1.0 for air and 78 for water at 25°C; if such a contaminant displaces water sufficiently in the subsurface, a distinct change in the relative dielectric permittivity occurs for this region of the subsurface (Knight 2001). Similarly, the presence of an immiscible phase of liquid, such as NAPL, may provide an even greater change in the dielectric constant than it would as a dissolved phase in the same media. When a pure NAPL displaces pore water, there is a reduction in conductivity and dielectric permittivity in the media which translates to an increase in the EM wave velocity and decrease in attenuation (Redman 2009). However, in practice, NAPL identification with GPR is typically limited to recent releases that exhibit little weathering of the NAPL via biodegradation or other chemical interactions.

GPR works best in dry, coarse-grained materials such as sand and gravel. High‑conductivity soils and saltwater applications are highly detrimental to GPR application because of the high likelihood of EM attenuation (ASTM 2011b). Because clayey fill (high conductivity) is frequently present in the shallow subsurface at most industrial sites, penetration depths may be less than 30 ft (about 10 m) for most environmental investigations (USEPA 2016d).

GPR equipment and software are complex and technical training is needed for proper use, data filtering and processing, and interpretation. Training is particularly important for environmental applications of the technology; GPR manufacturers provide integrated tools for utilities detection that are often operated by a less experienced operator. GPR services should be contracted to specialty providers with the proper training and experience; the end user is advised to be wary of contractors who treat GPR as a “black box” (where data goes into a computer and generates results with no processing or data interpretation). Users of GPR data should seek references and project examples from potential GPR providers to vet their experiences and backgrounds. Project objectives and GPR capabilities should be established with the contractor early in the project based on conditions at the site. The GPR contractor should provide input on the advantages and limitations of GPR for the site. GPR services are often contracted using a daily rate, particularly for short, well-defined projects.

For additional information on accepted and recommended procedures for implementing GPR, please refer to (ASTM 2011b).

5.3.2 Data Collection Design

GPR is often one of the first tools employed at a site as the CSM is being developed. Many factors at a site can attenuate the EM wave energy from GPR and, if not well understood and addressed in the investigation design, reduce the effectiveness of GPR. Three factors that should be understood prior to deploying GPR are the following:

- expected site stratigraphy and saturation data (including the types of sediments, stratigraphical order, depths, thicknesses, and water table depth) available from regional to local geologic literature and previous site characterization, if available

- presence of subsurface utilities and other potential interferences such as rebar-reinforced concrete, including their approximate locations based on aboveground indicators (for example, manholes, gas line markers), composition, depths

- aboveground access and interferences that could constrain equipment deployment and locations of potential aboveground interferences such as fences, signs, power lines

Having a solid grasp of these site characteristics is vital for the successful use of GPR.

Knowledge of the expected depth of investigation and the relative suitability of soils can help potential GPR users narrow their choice of methods. For example, the EC of near-surface sediments is a critical consideration because GPR is often rendered ineffective by excessive signal attenuation in conductive soils (for example, clayey soils, high salinity soils). The USDA Natural Resources Conservation Service has used the soil attribute data from the State Soil Geographic (STATSGO) and the Soil Survey Geographic (SSURGO) databases to develop thematic maps showing relative suitability of soils for many GPR applications (see the USDA NRCS Ground-Penetrating Radar Soil Suitability Maps website (USDA 2009)). A generalized map of the U.S. and each state (excluding Alaska) is listed and can be downloaded as a PDF. GPR suitability is rated on a scale of one to six. Some areas have not been digitized and have no data (USDA 2009). These maps are a guide and local conditions may vary.

Additional considerations include information gained by answering the following questions (Annan 2003):

- Is the target within the detection range of GPR regardless of the subsurface characteristics of the site?

- Will the target elicit a response that is detectable and discernable above background noise?

- Are multiple targets that need to be included in the study design likely to be present?

- Are there other conditions that would preclude using GPR?

A GPR study must be designed knowing the desired resolution and the depth of assessment that can be reliably attained for that level of resolution. The EM pulse frequency governs a tradeoff between signal penetration and data resolution (the lower the frequency of the EM pulse, the deeper the signal penetration but the poorer the data resolution, and vice versa). Two commonly used rules of thumb for evaluating the resolution of GPR are (1) the resolution may be approximated as the maximum depth of the investigation divided by 100 and (2) GPR will be ineffective if the actual target depth is greater than 50% of the maximum depth of the radar (Annan 2003). Additionally, when detecting subsurface utilities, the minimum detectable conduit diameter is about 1 inch per foot of burial depth (for example, a minimum 4-inch-diameter conduit located 4 ft below ground surface).
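The rules of thumb above lend themselves to a quick screening check. The following is a minimal sketch only; the function name, argument names, and dictionary keys are hypothetical, and the result is no substitute for site-specific judgment by an experienced GPR provider.

```python
def gpr_screening(max_depth_ft, target_depth_ft, conduit_diameter_in=None):
    """Apply the rule-of-thumb checks described in the text.

    max_depth_ft        -- maximum investigation depth expected for the antenna
    target_depth_ft     -- expected depth of the target
    conduit_diameter_in -- optional conduit diameter for the utility rule
    """
    result = {
        # Rule 1: resolution is roughly maximum depth / 100.
        "approx_resolution_ft": max_depth_ft / 100.0,
        # Rule 2: targets deeper than 50% of maximum depth are unlikely to be seen.
        "target_depth_ok": target_depth_ft <= 0.5 * max_depth_ft,
    }
    if conduit_diameter_in is not None:
        # Utility rule: about 1 inch of diameter per foot of burial.
        min_diameter_in = 1.0 * target_depth_ft
        result["min_detectable_diameter_in"] = min_diameter_in
        result["conduit_detectable"] = conduit_diameter_in >= min_diameter_in
    return result

# Example: a 4-inch conduit buried 4 ft deep, with a 60 ft maximum depth.
print(gpr_screening(max_depth_ft=60, target_depth_ft=4, conduit_diameter_in=4))
```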

GPR data may be collected using two modes: moving and fixed. In the moving mode, the transmitter and receiver are maintained at a fixed distance and are moved together along a line to produce a profile (Daniels 2000); (USEPA 2016d). The size of the target and survey objectives must consider the spacing between the traces received by the equipment (Daniels 2000). This mode offers the advantage of rapid data acquisition (See Figure 5‑4 and Figure 5‑5). In fixed mode, the transmitting and receiving antennas are moved independently to different points to make discrete measurements. The EM wave is transmitted, the receiver is turned on to receive and record the signals, and then the receiver is turned off (Daniels 2000). The measurements recorded create a trace, a time history of the travel of an EM pulse from the transmitter to the receiver. This mode is more labor intensive but provides greater flexibility than moving mode.

5.3.3 Data Processing and Data Visualization

Collected GPR data require considerable iterative postprocessing to improve data quality prior to interpretation. This postprocessing is performed with specialty software and should be conducted by a competent and trained practitioner. Because advanced data processing often includes a higher degree of interpreter bias, it is important to discuss the assumptions made by the practitioner processing the data (Annan 2009).

The GPR trace recorded must be converted from two-way travel time to a depth. The trace is a record of the changes in amplitude of the EM wave as it travels through the subsurface and encounters reflectors that send the wave back to the ground surface (see Figure 5‑4). The propagation velocity of the EM wave is calculated by dividing the propagation velocity of an EM wave in a vacuum (3 × 10^8 m/s) by the square root of the relative dielectric permittivity of the subsurface materials (obtained from literature sources). Once the propagation velocity has been estimated based on published values or professional judgment, it is multiplied by one-half of the measured two-way travel time (the wave travels down to the reflector and back) to determine the equivalent depth (ASTM 2011b).
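A minimal sketch of the time-to-depth conversion follows, assuming a single uniform layer with a known relative dielectric permittivity. The travel time is halved because the recorded time covers the path down to the reflector and back. The function name and the example values are illustrative.

```python
import math

C_M_PER_S = 3.0e8  # propagation velocity of an EM wave in a vacuum, m/s

def depth_from_twt(twt_ns, rel_permittivity):
    """Convert two-way travel time (ns) to reflector depth (m)."""
    velocity_m_per_s = C_M_PER_S / math.sqrt(rel_permittivity)
    one_way_time_s = (twt_ns * 1e-9) / 2.0  # down-and-back time halved
    return velocity_m_per_s * one_way_time_s

# Example: a reflection at 40 ns in a material with an assumed
# relative dielectric permittivity of 9 (velocity of 1 x 10^8 m/s).
print(f"{depth_from_twt(40, 9):.2f} m")  # 2.00 m
```

In layered ground, the conversion would need to be applied layer by layer with a velocity for each layer, which this sketch does not attempt.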

The objective of GPR data presentation is to depict a visual image that approximates the subsurface and position of any anomalies. Oftentimes, GPR results include subtleties that do not produce sharp boundaries. These subtleties often represent gradational boundaries that can complicate GPR data interpretation. Examples include heterogeneous fill materials or the water table (Annan 2003). These subtleties in data interpretation should be carefully considered. A significant amount of data filtering is required to produce usable and unbiased GPR images of subsurface data. Corrections to the recorded EM wave are made to account for the effects of attenuation due to geometric spreading, removal of low-frequency energy that subsequently distorts the recorded waveform, and topography. More sophisticated processing includes a step known as migration to correct the location of reflections coming from interfaces that are not vertically below the instrument. Further details can be found in (Annan 2009).

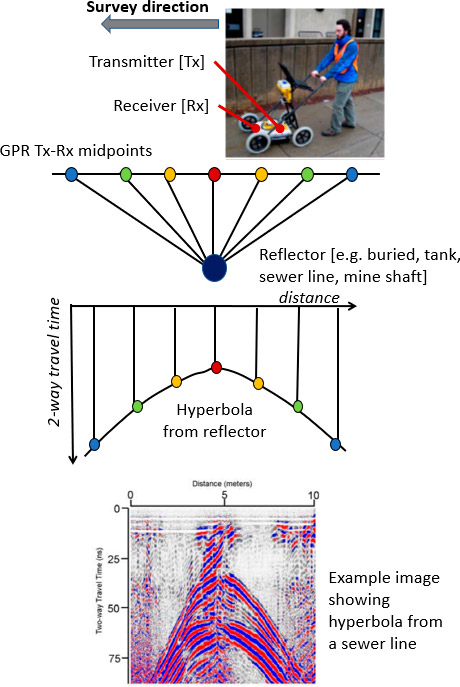

GPR displays are most often presented as 2-D cross sections showing travel times for reflection events. These plots depict distance at ground surface on the x axis versus travel time (a surrogate for depth below ground surface) on the y axis. An example of a 2-D image illustrating the location of a sewer line is shown in Figure 5‑5. This figure also shows how dragging the GPR across a localized target (such as a sewer line) results in a characteristic hyperbola in the reflection record.

Figure 5‑5. Schematic of a GPR survey of a buried tank.

Source: Rutgers University Newark, Lee Slater, Used with permission
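The characteristic hyperbola from a localized target follows from simple geometry: the two-way travel time is shortest directly above the target and increases as the antenna moves away. The sketch below assumes a point target, a uniform velocity, and coincident transmitter and receiver positions; the velocity of 0.1 m/ns and the target position are hypothetical values.

```python
import math

def diffraction_twt_ns(x_m, target_x_m, target_depth_m, velocity_m_per_ns):
    """Two-way travel time (ns) to a point target from surface position x."""
    slant_range_m = math.hypot(x_m - target_x_m, target_depth_m)
    return 2.0 * slant_range_m / velocity_m_per_ns

# Antenna positions every 0.5 m along a 4 m transect (hypothetical survey).
positions = [i * 0.5 for i in range(9)]
times = [diffraction_twt_ns(x, target_x_m=2.0, target_depth_m=1.0,
                            velocity_m_per_ns=0.1) for x in positions]

for x, t in zip(positions, times):
    print(f"x = {x:.1f} m, two-way time = {t:.1f} ns")
```

The apex of the hyperbola (20 ns at x = 2.0 m in this example) marks the horizontal position of the target, and the shape of the limbs depends on the velocity.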

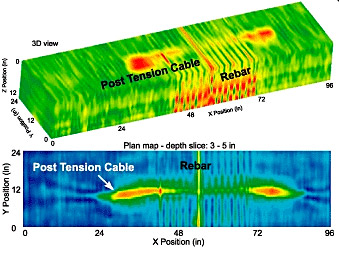

Another useful representation of GPR datasets is a 3-D block diagram showing relative amplitudes of EM energy at different levels or slices. These block diagrams are useful for identifying and isolating features of interest but can be more challenging for nontechnical reviewers to understand. For a 3-D image to be useful, the amplitude color ranges should be assigned thoughtfully with a minimum number of colors. The viewing angle is another important visualization aspect to consider. A comparison of 2-D and 3-D images for the same target is shown in Figure 5‑6.

Figure 5‑6. 3-D GPR block view of response contrast for metallic objects in relation to 2-D horizontal slices.

Source: (USEPA 2016d)

5.3.4 Quality Control

QC procedures apply to GPR field procedures and data processing and interpretation activities. In the field, test lines are used to obtain the system settings that are optimal for the site. Field logs should be maintained to document system settings and field procedures and changes to either. Site conditions at the time of the survey (weather, ambient conditions) should be recorded because they can influence test data. Equipment calibration is usually performed in accordance with manufacturer’s specifications. Operational checks should be performed prior to use and throughout the day as a periodic check. Corrective actions taken to fix equipment or troubleshoot problems should also be recorded. Data should be reviewed as quickly as possible in the field so that scans can be rerun if necessary. It is good practice to rerun the test line to confirm proper system operation (ASTM 2011b).

5.3.5 Limitations

GPR has depth limitations depending on the contrast of electrical properties of the geological formations and the variability in signal attenuation. These factors result in signal losses due to EC, the dielectric constant, and scattering (ASTM 2011b). The effectiveness of a GPR survey is strongly dependent on the rate of attenuation of the EM energy as the signal propagates into the earth. At some distance, the attenuation is so excessive that the returned signal is no longer detectable above the EM noise levels. The attenuation of EM waves is largely from EM energy converting to thermal energy, an effect that increases with the EC of soil, rock, and fluids (USEPA 2018b). The attenuation rate determines the penetration depth of a GPR instrument at a site. In rocks and soil, the degree of saturation, content of pore fluid (for example, free ions in solution), clay mineralogy, and soil types have a strong influence on the bulk material’s conductivity and ability to transmit an EM wave (ASTM 2011b).

Unfortunately, site conditions often preclude optimal performance of GPR. The ionic strength of site groundwater and thickness of clay deposits reduce signal-penetration depth. In general, signal penetration is best in dry, sandy, or rocky soils and worse in moist, clayey, and conductive soils (USEPA 1993). Signal penetration can be limited to 3 ft (1 m) or less in conductive materials such as seawater, mineral-rich clays, or metallic materials. Conversely, penetration depths have been reported from up to 100 ft in water-saturated sands to nearly 1,000 ft in granite (ASTM 2011b). In a quartz sand-rich environment with the water table at 15 ft, depths of 20 ft are easily obtainable.

Similarly, the presence of surface and buried conductive (metallic) debris on a site can severely hamper subsurface investigations. Radar waves are predominately attenuated by EC (Everett 2013). Reinforcing rods in concrete, buried railway tracks, and even metal underground tanks whisk radar wave energy away leaving little energy for the required detection of reflections.

Finally, accessibility issues on a site can limit effective GPR deployment. GPR equipment must be physically moved across the study area. Although the equipment is portable and often pushed manually by one person via a cart comparable in size to a shopping cart (see Figure 5‑5), heavily wooded sites, congested areas, or heavily sloped areas can limit GPR. Air-wave interferences are also problematic because they reflect the EM signal, which is then captured by the receiver. Air-wave reflections can occur from overhead power lines, telephone poles, walls, fences, and vehicles (Annan 2003).

5.3.6 Cost

Estimates for GPR surveys are typically quoted on a day-rate basis. A typical GPR survey to search for USTs or locate utilities can cost about $3,000 for 1 acre to 1.5 acres. GPR surveys geared to locate subsurface voids may require tighter line spacing, so the cost increases to approximately $3,500 for 0.5 acres. These daily rates normally include costs for personnel, equipment, acquisition, interpretation, and reporting.

The number of GPR personnel needed to run a survey depends on the type of survey. A wildcat survey (random lines) can be performed by one person; a grid survey requires at least three people. Sometimes both techniques can be used at a site. Generally, a wildcat survey is used to identify a more specific area of interest where a grid survey is then performed. Mobilization and per diem costs are based on the location of the site and the distance from the contractor’s office.

5.3.7 Case Studies

Examples of how GPR can be applied are provided in the following case studies:

- Surface and Borehole Geophysical Technologies Provide Data to Pinpoint and Characterize Karst Features at a Former Retail Petroleum Facility in Kentucky

- GPR Data Show Location of Buried Debris and Piping Associated with a Former Gas Holder in Minnesota

5.4 Seismic Methods

Seismic exploration methods are commonly used to determine site geology, stratigraphy, and rock quality. These techniques provide information about subsurface geology, and they make use of the properties of sound waves as they propagate through earth. The methods are based on the fact that geologic materials have different acoustic impedance (the product of density and seismic velocity). This difference results in body waves that reflect or refract at the interface of two strata, and surface waves that disperse (spread out) as they travel across the surface of the Earth.

Seismic reflection and refraction techniques measure the travel time of two types of body waves from surficial shots (an energy source, usually in the form of a sledgehammer striking a metal plate flat on the ground): the primary wave (P wave) and the secondary wave (S wave). The P wave, also called the compression wave, is the fastest traveling wave and, consequently, the first to arrive at a strata boundary. The S wave, also called the shear or transverse wave, travels slower than the P wave and is produced by the conversion of a P wave at a boundary. Seismic-wave velocities depend on the elastic moduli and the densities of the geologic material through which the wave travels. Elastic moduli are a set of constants that define how a material deforms in response to stress and then recovers its original shape after the stress stops. For example, a sound wave travels at a speed of 300 m/sec to 800 m/sec through unconsolidated sand but at 4,600 m/sec to 7,000 m/sec through granite.

MASW is a seismic technique that uses surface waves (specifically Rayleigh waves) from a similar energy source (for example, sledgehammer striking a metal plate) to determine shear-wave velocity (Vs) profiles in the subsurface. The basis of this technique is the dispersive characteristics of surface waves when traveling through a layered medium; different wavelengths propagate with different velocities depending on their penetration depth. There are two types of MASW techniques (passive and active); active MASW is the most common and is discussed in this section.

Applications for seismic methods include mapping depth to bedrock and bedrock topography, mapping depth to groundwater, identifying and mapping faults, identifying geologic settings for NAPL transport, mapping stratigraphy, and mapping subsurface geologic structures. In addition, MASW is commonly used for mapping variation in soil stiffness (Vs is a direct indicator of ground stiffness) and identifying low-velocity anomalies (for example, karst terrain, voids and sinkholes, severely weathered bedrock, waste pits, and surface bedrock features).

Although seismic methods (especially reflection) can be more expensive than other geophysical methods, they are cost effective for the information they provide compared to nongeophysical intrusive methods. Equipment is readily available, portable, and nonintrusive, and seismic measurements have good resolution and provide relatively rapid coverage of a large area.

5.4.1 Use

A linear array of geophones (a recording device used to convert velocity into voltage) is typically established along the ground surface. Surficial shots (a seismic energy source, usually obtained by hitting a metal plate placed flat on the ground with a sledgehammer) are generated to provide a seismic wave in the form of a strong vibration that is recorded by the geophones. Shots are fired, in turn, at each geophone, and active geophones are progressively added ahead of the shots in a roll-along fashion. The geophones receive and transmit data to a data processing unit where software algorithms process the signal. The data processed are used to produce an image of the subsurface stratigraphy (Sheriff and Geldart 1995).

5.4.1.1 Multichannel Analysis of Surface Waves

In this method, the ground is struck multiple times with a sledgehammer to enhance the amplitude of the surface wave and minimize background noise. This process is called vertical stacking. Data are recorded and analyzed via dispersion and inversion analysis.
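The benefit of vertical stacking can be illustrated with synthetic data. The sketch below is not MASW processing software; it simply averages repeated noisy recordings of the same assumed signal to show that random noise is reduced while the repeatable signal is preserved. The sine-wave signal, noise level, and number of strikes are arbitrary assumptions.

```python
import math
import random

def signal(i):
    """Repeatable part of the record (an arbitrary sine wave)."""
    return math.sin(2.0 * math.pi * i / 50.0)

def make_trace(rng, n_samples, noise_std):
    """One synthetic record: the signal plus random Gaussian noise."""
    return [signal(i) + rng.gauss(0.0, noise_std) for i in range(n_samples)]

def vertical_stack(traces):
    """Average the records sample by sample."""
    n = len(traces)
    return [sum(samples) / n for samples in zip(*traces)]

def rms_noise(trace):
    """Root-mean-square difference between a record and the clean signal."""
    squared = [(s - signal(i)) ** 2 for i, s in enumerate(trace)]
    return math.sqrt(sum(squared) / len(squared))

rng = random.Random(0)  # fixed seed so the example is repeatable
traces = [make_trace(rng, 500, 1.0) for _ in range(16)]  # 16 hammer strikes
single_rms = rms_noise(traces[0])
stacked_rms = rms_noise(vertical_stack(traces))
print(f"noise in one record: {single_rms:.2f}, after stacking 16: {stacked_rms:.2f}")
```

For uncorrelated random noise, the reduction is expected to scale roughly with the square root of the number of records stacked.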

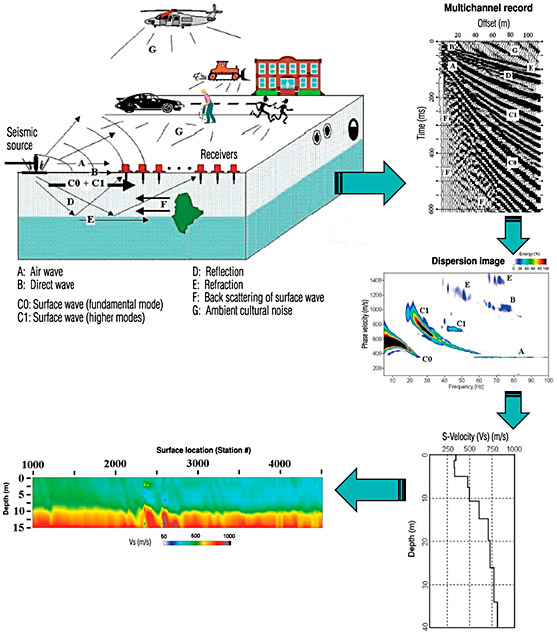

Because higher frequency signals are mostly refracted energy and refracted energy noise, low-frequency geophones are needed; low-frequency, vertically-polarized geophones are commonly used to record surface wave data because they can record extremely low-frequency data and attenuate higher frequency signals. The desired surface-wave energy to analyze in MASW usually ranges between 5 Hz and 40 Hz. Figure 5‑7 illustrates an overview of the method, including:

- data acquisition and the multichannel record obtained (note that it contains multiple frequencies despite looking like only one)

- dispersion image generated from each record along with its extracted dispersion curve

- one-dimensional shear-wave velocity profile that is back calculated from the curve

- final 2-D shear-wave velocity profile map produced using an appropriate interpolation method

Figure 5‑7. Overall procedure and application of MASW.

Source: Modified after (Park et al. 2007), Used with Permission

5.4.1.2 Reflection

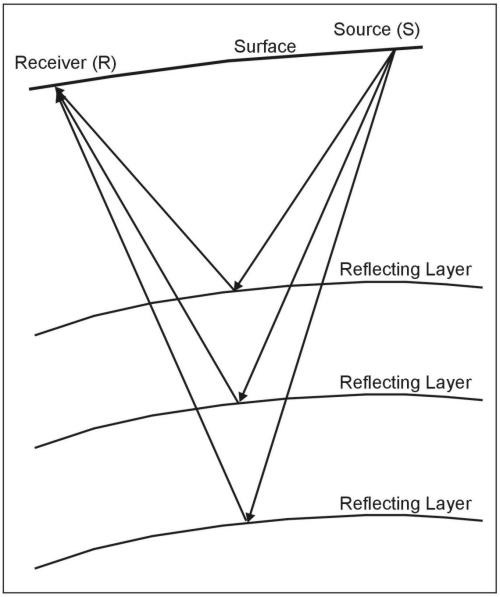

Reflection surveys use geophones to record the arrival of the P waves after they have reflected from a subsurface horizon. As the first seismic wave (the P wave) generated by the shot source (for example, sledgehammer) travels through the geologic media, it propagates along three different ray paths. The first path is along the ground surface (direct ray). The second path is the reflected wave, which travels down to the interface, hits it, and travels back up to the surface (reflected ray). The third path (critically refracted ray) is the refracted wave, which has three legs. The first leg travels downward through the first layer, at an off-set x from the downward reflected ray, and hits the interface. The second leg travels along the top of the second layer (at the speed of the lower layer). The third leg travels upward from the refractor to the surface as a result of critical refraction. A simplified diagram of the method is shown in Figure 5‑8.

Figure 5‑8. Schematic of the seismic reflection method.

Source: (USEPA 2016a)

5.4.1.3 Refraction

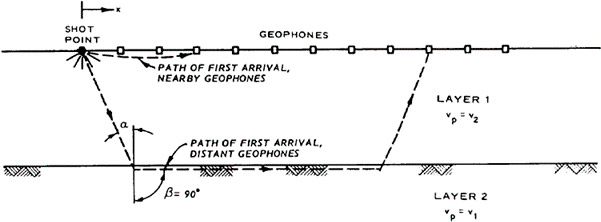

The seismic refraction technique analyzes the refracted wave along the different stratigraphic interfaces by recording the signatures on each geophone in the spread implanted in the ground. Ray paths go downward to the boundary, are refracted along the boundary, and return to the surface. The recorded signal response is plotted and processed as a function of the distance (off-set) from the source. The processed signal produces a cross section of the seismic wave velocity, which, in turn, conveys information about the geological stratigraphy (Sheriff and Geldart 1995); (Council 2000). By comparison, the seismic reflection technique generates an image of the subsurface impedance contrast for the area investigated; that image provides data related to the distance and travel time of the reflected wave (Council 2000). Figure 5‑9 provides a schematic of a seismic refraction survey. First arrivals near the shot have paths directly from the shot to the detector. Rays traveling along the boundary are the first to arrive at receivers (geophones) away from the shot because the lower material has a higher velocity (V2 > V1).

Figure 5‑9. Seismic refraction schematic.

Source: (USACE 1995)
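The change in first arrivals from the direct ray to the refracted ray can be illustrated with the standard two-layer travel-time equations. The sketch below assumes a single flat, horizontal interface and V2 > V1; the 5 m layer thickness is hypothetical, and the velocities are chosen from the ranges quoted earlier for unconsolidated sand and granite.

```python
import math

def direct_time_s(x_m, v1):
    """Travel time of the direct ray along the surface."""
    return x_m / v1

def refracted_time_s(x_m, h_m, v1, v2):
    """Travel time of the critically refracted ray (flat two-layer model)."""
    if v2 <= v1:
        raise ValueError("critical refraction requires v2 > v1")
    intercept_s = 2.0 * h_m * math.sqrt(v2**2 - v1**2) / (v1 * v2)
    return x_m / v2 + intercept_s

def crossover_distance_m(h_m, v1, v2):
    """Off-set beyond which the refracted ray arrives first."""
    return 2.0 * h_m * math.sqrt((v2 + v1) / (v2 - v1))

v1, v2, h = 500.0, 5000.0, 5.0  # m/s, m/s, m (illustrative values)
x_cross = crossover_distance_m(h, v1, v2)
print(f"crossover distance: {x_cross:.1f} m")
for x in (5.0, 30.0):
    first = "direct" if direct_time_s(x, v1) < refracted_time_s(x, h, v1, v2) else "refracted"
    print(f"off-set {x:.0f} m: first arrival is the {first} ray")
```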

5.4.2 Data Collection Design

The maximum depth and resolution of the data depend on the energy and frequency of the initial pulse and arrangement (geometry) of the geophones. Factors to consider when selecting an energy source include:

- its energy output (the stronger it is, the better the signal-to-noise ratio); for most environmental work, the best possible source is a 14 lb or 16 lb sledgehammer

- frequency content (higher frequencies offer high resolution but decay more quickly than lower frequencies; this lowers the depth of investigation)

- a source should allow for stacking measurements to improve the signal to noise ratio (it should be repeatable)

- the source signature should be well-defined (the wave energy should be known exactly) to significantly enhance the imaging quality (GPG 2017).

Common geometries of survey designs include multichannel and common midpoint (CMP) (GPG 2017). A multichannel design can be a split spread, which has a central shot with receivers on both sides, or a single-ended spread where the receivers are always on one side of the source. Seismic traces that belong to a single source form a common source gather. A CMP design uses multiple shots and receivers arranged specifically so that some subsurface points are sampled more than once. The goal is to identify all reflections from a point on various profiles and stack them to get an enhanced signal-to-noise ratio. The degree of multiplicity from a particular location is known as fold. For example, a 24-channel seismograph is commonly used to gather 12-fold data.
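The 12-fold example is consistent with the commonly used relation for regularly spaced 2-D lines, in which fold equals the number of channels multiplied by the receiver spacing and divided by twice the shot spacing. The sketch below assumes that relation; the function name and spacing values are illustrative.

```python
def cmp_fold(n_channels, receiver_spacing_m, shot_spacing_m):
    """Nominal CMP fold for a regularly spaced 2-D line."""
    return n_channels * receiver_spacing_m / (2.0 * shot_spacing_m)

# 24 channels with a shot at every receiver station gives 12-fold data.
print(cmp_fold(24, receiver_spacing_m=2.0, shot_spacing_m=2.0))  # 12.0
# Shooting at every other station halves the fold.
print(cmp_fold(24, receiver_spacing_m=2.0, shot_spacing_m=4.0))  # 6.0
```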

Additional general considerations for survey design are as follows (GPG 2017):

- Seismic reflection surveys are typically designed to detect a reflection from a particular subsurface point multiple times. This approach improves the ability to detect and image a given event because it makes the reflection easier to distinguish from direct arrivals and sound waves traveling through the air.

- Seismic refraction surveys are most useful at sites where velocities increase with depth (for example, a sand or gravel layer overlies a clay layer instead of a clay layer overlying a sand or gravel layer).

- The depth of penetration is approximately one-fifth of the length of the geophone spread, including off-set shots. For example, if the depth of investigation must be 10 m, at least 50 m (measured from off-set shot to off-set shot) must be available for the survey (GPG 2017).

- Vertical resolution for seismic data is how thin a layer can be before the reflections from its top and bottom become indistinguishable. Vertical resolution is dependent on the signal wavelength, which is dependent on the frequency and the velocity of the material. The theoretical minimum thickness is ¼ wavelength (for example, careful processing could extract the pulses if they overlap by half a wavelength) (GPG 2017).

- For MASW investigations, the resolvable depth of investigation that can be obtained with the highest accuracy is related to the receiver spacing. The receiver spacing should be greater than 30% of the investigation depth but not exceed the investigation depth (MASW 2018). In practice, the length of spread is usually limited in the range of 10 ft to 300 ft and the maximum depth of investigation is less than 150 ft. Note that surface waves generated by most seismic sources are attenuated and are not useable at the end of a long receiver spread because they become noisy (Park, Miller, and Xia 1999).

- For MASW investigations, topography influences the quality of the field measurements; surveying should be conducted on relatively flat ground for best results. The overall topographic variation might not affect the data, but the ground should be nearly flat, at least within the receiver span (Park 2006).
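Several of the design considerations above reduce to simple arithmetic that can be checked early in planning. The following is a minimal sketch of those rules of thumb; the function names are hypothetical, and the example velocity and frequency are assumed values rather than recommendations.

```python
def required_spread_length_m(depth_m):
    """Spread length (off-set shot to off-set shot): about 5 x target depth."""
    return 5.0 * depth_m

def min_resolvable_thickness_m(velocity_m_per_s, frequency_hz):
    """Theoretical vertical resolution: one-quarter of the wavelength."""
    wavelength_m = velocity_m_per_s / frequency_hz
    return wavelength_m / 4.0

def masw_receiver_spacing_range(depth):
    """MASW guidance from the text: greater than 30% of the investigation
    depth but not exceeding it (same length unit as the input)."""
    return 0.3 * depth, depth

print(required_spread_length_m(10.0))             # 50.0 m for a 10 m target depth
print(min_resolvable_thickness_m(2000.0, 100.0))  # 5.0 m at 2,000 m/s and 100 Hz
print(masw_receiver_spacing_range(20.0))          # lower and upper bounds
```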

5.4.3 Data Processing and Visualization

Qualified specialists typically perform data processing. These individuals should have all available information (for example, well logs, known depths, results from ancillary methods, and the expected results).

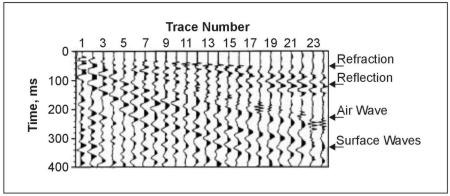

The data from seismic surveys are usually plotted on time distance graphs and as a profile of stacked data of distance versus time. Most seismic instrumentation is capable of drawing a vertical cross section through the ground, or profiles, that are a layer-cake representation of depth to acoustic boundaries (stratigraphic horizons) and types of acoustic anomalies. Figure 5‑10 provides a seismic reflection record. The receivers are arranged to one side of a shot, which is 15 m from the first geophone.

Figure 5‑10. Simple seismic reflection record.

Source: (USEPA 2016a)

Data processing consists of three primary activities: deconvolution, CMP stacking, and migration. Deconvolution restores a waveshape to its form before filtering. CMP stacking sums (stacks) the traces in a common midpoint gather. Migration performs an inversion so that reflections and diffractions are plotted at their true locations (Yilmaz 2001).

5.4.3.1 Seismic Reflection

The essential steps to process seismic reflection data (Baker 2019) are as follows:

- Obtain common source-point gathers.

- Sort into CMP gathers. (Reflection events appear as hyperbolic trajectories, and the goal is to stack them to a single trace.)

- Perform a velocity analysis for each event to find the stacking velocity.

- Perform a normal moveout correction and stack to yield a single trace corresponding to a coincident source and receiver.

- Composite the traces into a CMP-processed section.
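
The velocity analysis and normal moveout (NMO) steps above can be sketched for a single reflection event. This is a minimal illustration, not the workflow of any particular processing package: it assumes the reflection arrival times have already been picked on a CMP gather, and it estimates the stacking velocity with a straight-line fit of squared time against squared offset (the hyperbola t² = t0² + x²/v²). The function names and the example values are hypothetical.

```python
import math


def fit_stacking_velocity(offsets_m, times_s):
    """Fit t^2 = t0^2 + x^2 / v^2 to picked times by least squares.

    Returns (stacking_velocity_m_s, zero_offset_time_s).
    """
    xs = [x * x for x in offsets_m]   # squared offsets
    ys = [t * t for t in times_s]     # squared arrival times
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                 # equals 1 / v^2
    intercept = mean_y - slope * mean_x  # equals t0^2
    return 1.0 / math.sqrt(slope), math.sqrt(intercept)


def nmo_corrected_times(offsets_m, times_s, velocity_m_s, t0_s):
    """Remove the hyperbolic moveout so each pick maps to the zero-offset time."""
    corrected = []
    for x, t in zip(offsets_m, times_s):
        moveout = math.sqrt(t0_s ** 2 + (x / velocity_m_s) ** 2) - t0_s
        corrected.append(t - moveout)
    return corrected


if __name__ == "__main__":
    # Synthetic, noise-free picks (assumed values for illustration only).
    offsets = [15.0, 30.0, 45.0, 60.0]
    picks = [math.sqrt(0.1 ** 2 + (x / 1500.0) ** 2) for x in offsets]
    v, t0 = fit_stacking_velocity(offsets, picks)
    print(v, t0, nmo_corrected_times(offsets, picks, v, t0))
```

After the correction, the picks from all offsets line up at the zero-offset time, which is what allows the traces of the gather to be summed into one stacked trace.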

These techniques can be expensive due to software cost and the need for a qualified specialist to interpret the results, but technically robust and excellent results can be achieved. An important outcome of the processing is obtaining a true depth section. This requires conversion of the times of the reflections to depths by derivation of a velocity profile. Well logs and check shots are often necessary to confirm the accuracy of this conversion.
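
The time-to-depth conversion described above can be illustrated with a short sketch. It assumes a simple layered velocity profile in which each reflector has a picked two-way travel time and the layer above it has a known interval velocity (for example, from a check shot); the function name and the numbers in the usage note are hypothetical.

```python
def reflection_depths(two_way_times_s, interval_velocities_m_s):
    """Convert two-way reflection times to depths, layer by layer.

    two_way_times_s: two-way time to the base of each layer, increasing.
    interval_velocities_m_s: velocity of each layer, in the same order.
    """
    depths = []
    depth = 0.0
    previous_time = 0.0
    for t, v in zip(two_way_times_s, interval_velocities_m_s):
        # Half of the two-way time spent in the layer is the one-way time.
        depth += v * (t - previous_time) / 2.0
        depths.append(depth)
        previous_time = t
    return depths
```

For instance, `reflection_depths([0.02, 0.05], [500.0, 2000.0])` places the first reflector at about 5 m and the second at about 35 m. Any error in the assumed velocities carries directly into the depths, which is why well logs and check shots are used to confirm the conversion.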

5.4.3.2 Seismic Refraction

The generalized reciprocal method (GRM) is typically used to acquire, process, and interpret seismic refraction data. GRM is an interpretation method designed to accurately map undulating refractor surfaces from in-line refraction data using both forward and reverse shots. The method is related to the Hales and the reciprocal seismic refraction interpretation methods. In this method, it is crucial to acquire at least seven shots per spread. Assuming a 24-channel acquisition system, one center shot is required between geophones 12 and 13, one shot between each of geophone pairs 6 and 7 and 18 and 19, a near-offset shot from each end geophone (geophones 1 and 24), and one far-offset shot from each end of the spread. In practice, 13 shots per spread are often used for high-resolution investigations. To map the refractor surface, an off-end shot must be located at each end of the spread so that each first arrival on the geophone spread is a bedrock arrival.
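
The seven-shot layout described above can be written out as positions along the line. This is only a sketch: it assumes evenly spaced geophones with geophone 1 at position zero, and the near-offset and far-offset distances are hypothetical inputs that would be chosen for the site (the text does not specify them).

```python
def seven_shot_layout(spacing, near_offset, far_offset, n_geophones=24):
    """Return the seven shot positions along the line, sorted.

    Geophone k (1-based) sits at (k - 1) * spacing.
    near_offset and far_offset are distances beyond the end geophones
    (assumed values, not taken from the source text).
    """
    def midpoint(k_left, k_right):
        # Position halfway between two neighboring geophones.
        return ((k_left - 1) + (k_right - 1)) / 2.0 * spacing

    last = (n_geophones - 1) * spacing      # position of the last geophone
    half = n_geophones // 2                 # 12 for a 24-channel spread
    quarter = n_geophones // 4              # 6 for a 24-channel spread
    shots = [
        -far_offset,                            # far-offset shot, start of spread
        -near_offset,                           # near-offset shot at geophone 1
        midpoint(quarter, quarter + 1),         # between geophones 6 and 7
        midpoint(half, half + 1),               # center shot, geophones 12 and 13
        midpoint(3 * quarter, 3 * quarter + 1), # between geophones 18 and 19
        last + near_offset,                     # near-offset shot at geophone 24
        last + far_offset,                      # far-offset shot, end of spread
    ]
    return sorted(shots)
```

With a 2 m geophone spacing, a 1 m near offset, and a 20 m far offset, the shots fall at -20, -1, 11, 23, 35, 47, and 66 m along the line.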

Blind zones and hidden layers may be encountered. A hidden layer is an intermediate velocity, intermediate depth layer whose thickness or velocity is such that rays from a deeper, higher velocity layer arrive at the ground surface sooner than rays from the hidden layer. Standard seismic refraction interpretation schemes yield significant errors in calculated refractor depths in the presence of blind zones or hidden layers. The GRM is the only method capable of overcoming this problem.

Data processing includes the selection of first-break arrival times, the generation of time-distance plots for each line, the assignment of selected portions of the travel-time data to individual refractors, and the phantoming of travel-time data for the target (lower) refractor. Once this preprocessing work is complete, GRM processing begins. Layer thicknesses and velocities are calculated, and a geophysical interpretation of the geological parameters is made. The end product is a seismic refraction profile that indicates the seismic layers detected, the depths to the interfaces between layers as they vary along the line, and the seismic velocities encountered.
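
To show how layer velocities and a refractor depth follow from a time-distance plot, the sketch below uses the classic two-layer intercept-time calculation. It is deliberately much simpler than GRM and is not a substitute for it: it assumes a flat, horizontal refractor, velocity increasing with depth, and first-break picks that have already been assigned to the direct wave or to the refractor. All names and values are hypothetical.

```python
import math


def fit_line(xs, ys):
    """Least-squares straight line; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def two_layer_depth(direct_picks, refracted_picks):
    """Estimate v1, v2, and refractor depth from assigned first breaks.

    Each pick is an (offset_m, first_break_time_s) tuple.
    """
    slope1, _ = fit_line(*zip(*direct_picks))
    slope2, intercept_time = fit_line(*zip(*refracted_picks))
    v1 = 1.0 / slope1   # overburden velocity from the direct-wave slope
    v2 = 1.0 / slope2   # refractor velocity from the refracted-wave slope
    depth = intercept_time * v1 * v2 / (2.0 * math.sqrt(v2 ** 2 - v1 ** 2))
    return v1, v2, depth
```

The same picks feed a GRM interpretation, which additionally uses the reverse shots to follow an undulating refractor rather than assuming a flat one.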

5.4.3.3 MASW

After seismic data are uploaded into the MASW software (for example, SurfSeis), the velocity at which each different frequency propagates is automatically analyzed. Each multichannel record is transformed from the space-time domain to the frequency domain, and a dispersion image (also called an overtone image) is produced. The dispersion image shows the fundamental and higher modes of the Rayleigh waves. A fundamental mode dispersion curve is then extracted from each dispersion image (Park 2006). Accurate extraction of the dispersion curve is the most critical step because it primarily determines the accuracy of the final shear-wave velocity profile. In a trial-and-error approach, the user can run the dispersion program several times for different phase velocity ranges and select the optimum dispersion curve. To improve the estimation of the optimum dispersion curve, multiple curves are extracted from several multichannel records and are combined into a single experimental curve.

The final step is developing the shear-wave velocity profile from the dispersion curve using an inversion process. Rayleigh waves are the product of interfering compressional and shear waves; therefore, inversion of the dispersion curve results in compressional and shear-wave velocities as a function of depth. In the inversion process, several possible soil models are estimated, and a dispersion curve model that best fits the experimental dispersion curve is selected. The theoretical dispersion curve of the models is first computed and compared to the experimental dispersion curve based on the root mean square error (RMSE) between the two curves. If the calculated RMSE is greater than the specified minimum error, the soil model is modified, and a new dispersion curve is calculated. The procedure is repeated until either the specified minimum error or the maximum number of iterations is reached. The shear-wave velocity profile of the model resulting in the best fit is the final output of dispersion-inversion analysis (Olafsdottir, Bessason, and Erlingsson 2018).
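
The iterate-until-fit logic described above can be sketched as follows. The forward model here is a crude placeholder (an assumption made only so the loop is runnable) and is not a physically rigorous Rayleigh-wave dispersion calculation; real software computes the theoretical curve from layered-earth theory. The model update is a simple random perturbation that is kept only when it lowers the RMSE. All function names, parameters, and values are hypothetical.

```python
import math
import random


def rmse(curve_a, curve_b):
    """Root mean square error between two dispersion curves."""
    n = len(curve_a)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(curve_a, curve_b)) / n)


def toy_dispersion(freqs_hz, vs_top, vs_half, thickness_m):
    """Placeholder forward model (NOT rigorous): high frequencies sample
    the top layer, low frequencies sample the underlying half-space."""
    curve = []
    for f in freqs_hz:
        weight_top = 1.0 - math.exp(-3.0 * thickness_m * f / vs_top)
        curve.append(0.92 * (weight_top * vs_top + (1.0 - weight_top) * vs_half))
    return curve


def invert(freqs_hz, observed, start_model, min_error, max_iterations, seed=0):
    """Perturb the model until the RMSE target or the iteration cap is hit.

    Returns (best_model, best_rmse, iterations_used).
    """
    rng = random.Random(seed)
    best = tuple(start_model)
    best_err = rmse(toy_dispersion(freqs_hz, *best), observed)
    iterations = 0
    while best_err > min_error and iterations < max_iterations:
        # Modify the soil model: change each parameter by up to +/-10%.
        trial = tuple(p * (1.0 + rng.uniform(-0.1, 0.1)) for p in best)
        trial_err = rmse(toy_dispersion(freqs_hz, *trial), observed)
        if trial_err < best_err:   # keep the trial only if the fit improves
            best, best_err = trial, trial_err
        iterations += 1
    return best, best_err, iterations
```

The two stopping conditions in the `while` loop correspond to the specified minimum error and the maximum number of iterations; the model returned is the one with the lowest RMSE found.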

Spatial interpolation methods can be used to create a 2-D shear-wave velocity map by assigning each 1-D shear-wave velocity profile at the surface coordinate in the middle of the receiver spread used to acquire the corresponding record (Park 2005).

5.4.4 Quality Control

To ensure seismic data are properly recorded, the geophones should have the following:

- a digital converter to convert electrical signals into a time series of numbers

- the ability to start digitizing at the same time the shot is initiated

- a computer to manage input and to plot signals so that data quality can be checked visually

- in-built software to carry out initial data interpretations (GPG 2017)

To acquire data of sufficient quality, the surveyor should ensure no noise is generated at the geophones by confirming that the geophone has a good contact with the ground surface. Adding salty water to moisten the soil at the geophone location can enhance wave acquisition. In addition, ambient noise should be kept to a minimum during the survey. Avoid noise from people walking, cars, or heavy equipment movement in the proximity of the geophones.

Source offset (the distance between the source and first receiver) is an important consideration for MASW investigations because surface waves are formed at a certain distance from the source. If the first receiver is placed closer than this distance to the source, ambient noise is recorded rather than the surface waves. Source offset has been suggested to lie within the range of 25% to 50% of the receiver array length (MASW 2018); (Park and Carnevale 2010); (Park, Miller, and Xia 1999).
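
The suggested source-offset range lends itself to a quick field check. The sketch below simply applies the 25% to 50% guidance cited above to a given receiver array length; the function names are hypothetical, and the result is a starting range, not a substitute for test shots at the site.

```python
def masw_source_offset_range(array_length, low_fraction=0.25, high_fraction=0.50):
    """Return the (minimum, maximum) suggested source offset.

    array_length and the returned offsets share the same length unit.
    """
    return low_fraction * array_length, high_fraction * array_length


def offset_within_guidance(source_offset, array_length):
    """True if the planned source offset falls inside the suggested range."""
    low, high = masw_source_offset_range(array_length)
    return low <= source_offset <= high
```

For a 100 ft receiver array, the suggested source offset is 25 ft to 50 ft, so a planned offset of 10 ft would fall outside the guidance.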

In addition to data-acquisition quality discussed above, the field data for MASW investigations require a number of preprocessing and processing considerations:

- Raw seismic data are converted into the Kansas Geological Survey (KGS) data processing format or SEG-Y. The KGS format is named for the Kansas Geological Survey, which first developed the method. Field geometry should be correctly assigned during the data format conversion.

- Field data should be inspected, and bad records removed.

- Field data are inspected for the consistency in the alignment of surface waves in neighboring records.

- Filtering and muting are used to identify and eliminate noise.

- Sometimes preliminary data processing is performed to investigate the optimum ranges of phase velocity and frequency.

- To improve the estimation of the optimum dispersion curve, multiple curves are extracted from several multichannel records and are combined into a single experimental curve.

- Inversion of the data further reduces the uncertainty associated with the experimental dispersion curve estimates.

5.4.5 Advantages

5.4.5.1 Seismic Reflection

Seismic reflection can define sequential stratigraphy to great depths (>1,000 m or 3,281 ft), although a thick sequence of dry gravel greatly affects the depth of penetration. Depending on the application, seismic reflection can resolve layers down to 1 m (3 ft) thickness and is not affected by highly conductive electrical surface layers. Other advantages include:

- The data permit mapping of many horizons with each shot.

- The method can work regardless of velocity at depth (for example, reflection surveys are not hampered by clay layer overlying a sand/gravel layer).

- Observations can be more readily interpreted in terms of complex geology.

- Observations use the entire reflected wavefield (the time history of ground motion at different distances between the source and the receiver).

- The subsurface is directly imaged from the acquired observations.

5.4.5.2 Seismic Refraction

Seismic refraction is often used in shallow areas (less than 30 m or 100 ft) where the principal goal is to map bedrock topography beneath a single overburden unit. It also is employed to map weathered bedrock and fracture zones during water prospecting. If the seismic velocity of the subsurface units does not increase with depth, results may have to be modified or discarded. For example, a thin, low-velocity sand unit that is overlain by a high-velocity clay unit may not be resolvable with the refraction technique. Recent advances in inversion of seismic refraction data make it possible to image relatively small, nonstratigraphic targets such as foundation elements and to perform refraction profiling in the presence of localized low-velocity zones such as incipient sinkholes. Other advantages include:

- Refraction observations generally employ fewer source and receiver locations and are thus relatively cheap to acquire.

- Little data processing is performed on refraction observations with the exception of trace scaling or filtering, which helps pick the arrival times of the initial ground motion.

- Because such a small portion of the recorded ground motion is used, developing models and interpretations is no more difficult than with other geophysical surveys.

5.4.5.3 MASW

MASW is a low-cost, noninvasive method that does not require heavy machinery for data measurement. Measuring geophones are placed in the ground and do not require coupling to the ground. Therefore, data are acquired relatively quickly without leaving lasting marks on the surface. Compared to seismic reflection and refraction methods, MASW is applicable in a wider range of field conditions.

The main advantage of the MASW method compared to seismic reflection and refraction is that it determines stiffness profiles with far greater precision. Surface waves have stronger energy than body waves; hence, higher signal-to-noise ratios are usually obtained with surface-wave methods (Park, Miller, and Miura 2002); (Gouveia, Lopes, and Gomes 2016).

5.4.6 Limitations

The main disadvantage of seismic methods lies in data interpretation, which requires ground-truthing, information from other tools, and substantial expertise. The performance of seismic methods can be significantly affected by cultural noise, such as that from highways and airports, as well as by buried building foundations. Seismic methods do not perform well in heterogeneous settings where thin discontinuous layers may be missed. Additionally, depth penetration may be sacrificed due to a lack of low-frequency seismic energy (Anderson 2014). Other tool-specific limitations are discussed below.

5.4.6.1 Seismic Reflection

Seismic reflection costs more than refraction and is limited to penetration depths generally greater than approximately 50 ft. At depths less than approximately 50 ft, reflections from subsurface density contrasts arrive at geophones at nearly the same time as the much higher amplitude ground roll (surface waves) and air blast (the sound of the shot). Reflections from greater depths arrive at geophones after the ground roll and air blast have passed, making these deeper targets easier to detect and delineate. It is possible to obtain seismic reflections from shallow depths, perhaps as shallow as 3 m to 5 m if the following activities are performed.

- Vary field techniques depending on depth.

- Contain the air blast.

- Place shots and geophones near shallow or surface groundwater.

- Use severe low-cut filters and arrays of a small number (one to five) of geophones.

- Ensure reflections are visible on the field records after all recording parameters are optimized.

- Guide data processing by the appearance of field records; use extreme care to avoid stacking refractions or other unwanted artifacts as reflections.

Additional disadvantages are as follows:

- Because many source and receiver locations must be used to produce meaningful images, reflection seismic observations can be expensive to acquire.

- Reflection seismic processing requires appropriate software and a relatively high level of expertise. Thus, processing observation data is relatively expensive.

- At later times in the seismic record, more noise is present, making the reflections difficult to extract from the unprocessed data.

5.4.6.2 Seismic Refraction

Seismic refraction is generally applicable only where the seismic velocities of layers increase with depth. Therefore, where higher velocity (for example, clay) layers may overlie lower velocity (for example, sand or gravel) layers, seismic refraction may yield incorrect results. Additionally, seismic refraction requires geophone arrays with lengths of approximately four to five times the depth to target of interest. This limits its application to mapping features at depths less than 100 ft. Greater depths are possible, but the required array lengths may exceed site dimensions, and the shot energy required to transmit seismic arrivals for the required distances may necessitate using large explosive charges. Furthermore, the lateral resolution of seismic refraction data degrades with increasing array length due to migration of seismic waves. Other disadvantages include:

- Observations require relatively large source-receiver offsets.

- Observations are generally interpreted in terms of layers that can have dip and topography.

- Observations only use the arrival time of the initial ground motion at different distances from the source (offsets).

- A model for the subsurface is constructed by attempting to reproduce the observed arrival times.
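
The array-length rule of thumb given above for seismic refraction (approximately four to five times the target depth) can be checked against the space available at a site. This is a minimal planning sketch; the function names and the site length in the usage note are hypothetical.

```python
def refraction_array_length(target_depth, factors=(4.0, 5.0)):
    """Return the (shortest, longest) array length suggested by the
    four-to-five-times-depth rule of thumb, in the units of target_depth."""
    return tuple(factor * target_depth for factor in factors)


def array_fits_site(target_depth, available_length):
    """True if even the shortest suggested array fits within the site."""
    shortest, _ = refraction_array_length(target_depth)
    return shortest <= available_length
```

A target at 100 ft calls for an array of roughly 400 ft to 500 ft, so `array_fits_site(100.0, 300.0)` returns `False` for a site with only 300 ft of accessible line.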

5.4.6.3 MASW

Rayleigh wave amplitudes decrease exponentially with depth; therefore, resolution diminishes markedly with increasing depth. As a result, investigations using MASW are limited to much shallower depths than either reflection or refraction (Park et al. 2007). Consequently, the application of MASW is limited when identifying thin deep layers and depth of deeper interfaces. Because MASW data are recorded using receiver arrays and averaging (lateral and vertical) occurs during data acquisition, the use of MASW at complex sites where the bedrock depth and soil properties vary significantly is limited (Anderson 2014).

5.4.7 Cost

5.4.7.1 Seismic Reflection